Vision-Position Multi-Modal Beam Prediction

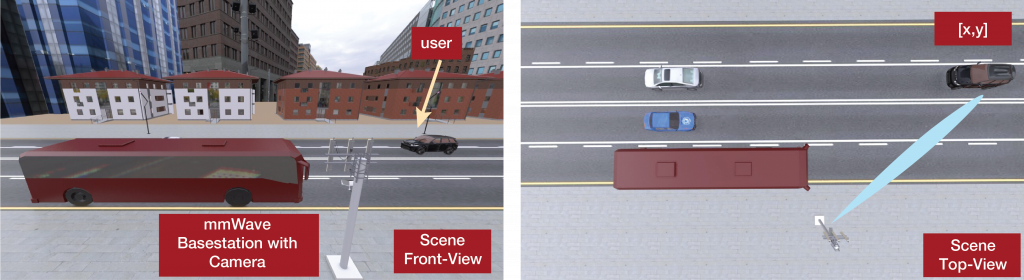

This figure illustrates the considered system and highlights the value of leveraging positional and visual (camera) side information for efficient mmWave/THz beam prediction.

Key ideas

- Conventional mmWave beam training in 5G/B5G systems incurs a large training overhead. Instead, in this paper, we propose utilizing prior observations and additional sensory data such as RGB images and practical GPS measurements.

- Both visual data and GPS measurements have limitations. For example, the positional error in publicly available GPS data can range from 1 to 5 meters, and camera/visual data is sensitive to lighting and weather conditions. To obtain a robust and reliable solution, we develop a machine learning-based framework that leverages both data modalities.
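
As a rough illustration of what combining the two modalities can look like, the sketch below concatenates a visual feature vector with a normalized GPS position and maps the result to a probability distribution over beam indices. This is not the architecture from the paper: the function names, the feature dimension, the number of beams, the bounding box, and the random weights are all hypothetical placeholders chosen for the example.

```python
import math
import random
from typing import List, Sequence, Tuple


def normalize_position(lat: float, lon: float,
                       lat_range: Tuple[float, float],
                       lon_range: Tuple[float, float]) -> List[float]:
    """Min-max scale a GPS fix to [0, 1] using an assumed bounding box."""
    return [
        (lat - lat_range[0]) / (lat_range[1] - lat_range[0]),
        (lon - lon_range[0]) / (lon_range[1] - lon_range[0]),
    ]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_beam_probs(image_features: Sequence[float],
                       position: Sequence[float],
                       weights: Sequence[Sequence[float]],
                       biases: Sequence[float]) -> List[float]:
    """Fuse both modalities by concatenation, then apply one linear layer."""
    fused = list(image_features) + list(position)
    logits = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(logits)


# Toy setup: every value below is made up for illustration only.
rng = random.Random(0)
NUM_BEAMS = 8   # hypothetical codebook size
IMG_DIM = 4     # hypothetical visual feature dimension

image_features = [rng.random() for _ in range(IMG_DIM)]
position = normalize_position(10.5, 20.25, (10.0, 11.0), (20.0, 21.0))
weights = [[rng.uniform(-1.0, 1.0) for _ in range(IMG_DIM + 2)]
           for _ in range(NUM_BEAMS)]
biases = [0.0] * NUM_BEAMS

probs = predict_beam_probs(image_features, position, weights, biases)
predicted_beam = max(range(NUM_BEAMS), key=lambda i: probs[i])
```

In a trained model the visual features would typically come from an image encoder and the weights would be learned from data; here they are random, so the predicted beam carries no meaning beyond showing the data flow.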

Applications

- Prediction of mmWave/THz beams in high-mobility real-world scenarios

More information about this research direction

Paper: Gouranga Charan, Tawfik Osman, Andrew Hredzak, Ngwe Thawdar, and Ahmed Alkhateeb, “Vision-Position Multi-Modal Beam Prediction Using Real Millimeter Wave Datasets,” arXiv preprint arXiv:2111.07574 (2021).

Abstract: Enabling highly-mobile millimeter wave (mmWave) and terahertz (THz) wireless communication applications requires overcoming the critical challenges associated with the large antenna arrays deployed at these systems. In particular, adjusting the narrow beams of these antenna arrays typically incurs high beam training overhead that scales with the number of antennas. To address these challenges, this paper proposes a multi-modal machine learning based approach that leverages positional and visual (camera) data collected from the wireless communication environment for fast beam prediction. The developed framework has been tested on a real-world vehicular dataset comprising practical GPS, camera, and mmWave beam training data. The results show the proposed approach achieves more than ≈ 75% top-1 beam prediction accuracy and close to 100% top-3 beam prediction accuracy in realistic communication scenarios.
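
The top-1 and top-3 accuracies quoted in the abstract are top-k metrics: a prediction counts as correct when the ground-truth beam index is among the k beams the model scores highest. The snippet below is a minimal sketch of that metric, assuming per-beam scores are available for each sample; the function name and the toy data are hypothetical and are not taken from the paper or its dataset.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]],
                   labels: Sequence[int],
                   k: int) -> float:
    """Fraction of samples whose true beam is among the k highest-scoring beams."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")
    hits = 0
    for row, true_beam in zip(scores, labels):
        # Beam indices sorted by descending score, truncated to the best k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += true_beam in top_k
    return hits / len(labels)


# Toy scores for 3 samples over a 4-beam codebook (made-up numbers).
toy_scores = [
    [0.10, 0.70, 0.20, 0.00],
    [0.40, 0.35, 0.20, 0.05],
    [0.05, 0.15, 0.30, 0.50],
]
toy_labels = [1, 1, 0]

top1 = top_k_accuracy(toy_scores, toy_labels, k=1)  # 1 of 3 samples correct
top3 = top_k_accuracy(toy_scores, toy_labels, k=3)  # 2 of 3 samples correct
```

Top-3 accuracy is never lower than top-1 accuracy on the same predictions, which is why a top-3 figure close to 100% can accompany a top-1 figure near 75%.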

@misc{Charan2021,
  title={Vision-Position Multi-Modal Beam Prediction Using Real Millimeter Wave Datasets},
  author={Charan, Gouranga and Osman, Tawfik and Hredzak, Andrew and Thawdar, Ngwe and Alkhateeb, Ahmed},
  year={2021},
  eprint={2111.07574},
  archivePrefix={arXiv},
  primaryClass={eess.SP}
}

To reproduce the results in this paper:

Simulation codes:

Coming soon

These simulations use the DeepSense scenarios:

Scenario "5"

Scenario "6"

Example: Steps to generate the results in this figure

- Coming soon