Computer Vision Aided Beam Tracking in a Real-World Millimeter Wave Deployment

Shuaifeng Jiang1 and Ahmed Alkhateeb1

1Wireless Intelligence Lab, ASU

Our machine learning-based solution exploits camera sensing information about the wireless environment to efficiently guide mmWave/THz beam selection and beam tracking. The proposed solution is demonstrated in a real-world mmWave system.

Abstract

Millimeter-wave (mmWave) and terahertz (THz) communications require beamforming to acquire adequate receive signal-to-noise ratio (SNR). To find the optimal beam, current beam management solutions perform beam training over a large number of beams in pre-defined codebooks. The beam training overhead increases the access latency and can become infeasible for high-mobility applications. To reduce or even eliminate this beam training overhead, we propose to utilize the visual data, captured for example by cameras at the base stations, to guide the beam tracking/refining process. We propose a machine learning (ML) framework, based on an encoder-decoder architecture, that can predict the future beams using the previously obtained visual sensing information. Our proposed approach is evaluated on a large-scale real-world dataset, where it achieves an accuracy of 64.47% (and a normalized receive power of 97.66%) in predicting the future beam. This is achieved while requiring less than 1% of the beam training overhead of a corresponding baseline solution that uses a sequence of previous beams to predict the future one. This high performance and low overhead obtained on the real-world dataset demonstrate the potential of the proposed vision-aided beam tracking approach in real-world applications.
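To make the two reported metrics concrete, the following minimal sketch computes a top-1 beam prediction accuracy and a normalized receive power on toy data. The exact metric definitions used in the paper are not stated on this page; the sketch assumes that normalized receive power is the predicted beam's receive power divided by the optimal beam's receive power, averaged over samples. The function names and the toy power values are hypothetical.

```python
def top1_accuracy(predicted, optimal):
    """Fraction of samples whose predicted beam index equals the optimal one."""
    if len(predicted) != len(optimal) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(p == o for p, o in zip(predicted, optimal))
    return hits / len(predicted)


def normalized_receive_power(predicted, power_per_beam):
    """Mean ratio of the predicted beam's power to the best beam's power.

    power_per_beam[i][b] is the receive power (linear scale) of beam b
    for sample i. This definition is an assumption, not taken from the paper.
    """
    ratios = [powers[p] / max(powers) for p, powers in zip(predicted, power_per_beam)]
    return sum(ratios) / len(ratios)


# Toy data: 4 samples, codebook of 3 beams (values are made up).
powers = [
    [0.2, 1.0, 0.5],
    [0.9, 0.3, 0.1],
    [0.1, 0.4, 0.8],
    [0.5, 1.0, 0.25],
]
# The optimal beam is the one with the highest receive power per sample.
optimal = [max(range(len(p)), key=p.__getitem__) for p in powers]
predicted = [1, 0, 1, 1]

acc = top1_accuracy(predicted, optimal)
nrp = normalized_receive_power(predicted, powers)
```

In this toy case the third prediction misses the optimal beam but still lands on a beam with half the optimal power, so the normalized receive power stays well above the accuracy. The same effect is one plausible reading of the gap between the reported 64.47% accuracy and 97.66% normalized receive power.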

Proposed Solution

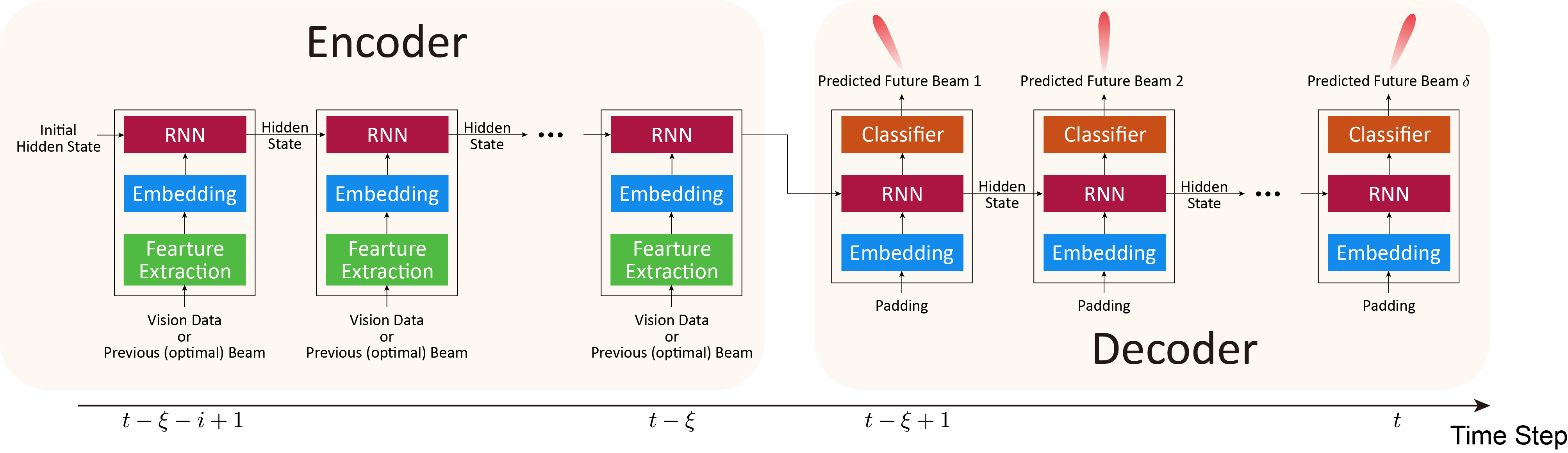

This figure presents the proposed framework for vision-aided beam tracking. The BS senses the environment and the moving UE with an RGB camera. The visual sensing information is then processed by an RNN model to predict optimal beams for the current and future time steps at the base station.
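The data flow described above can be sketched with a tiny, untrained encoder-decoder recurrence. This is not the authors' model: it substitutes a plain tanh (Elman-style) recurrence for whatever RNN cell the paper uses, the weights are random, and the input features (a 4-value vector per frame, such as a bounding box of the UE), the 64-beam codebook size, and the class name `TinyEncoderDecoder` are all assumptions made for illustration. It only shows how a sequence of visual features maps to one beam index for the current step plus one per future step.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class TinyEncoderDecoder:
    """Untrained Elman-style encoder-decoder, for shape illustration only."""

    def __init__(self, feat_dim, hidden_dim, num_beams, seed=0):
        rng = random.Random(seed)
        self.W_in = rand_matrix(hidden_dim, feat_dim, rng)     # input -> hidden
        self.W_enc = rand_matrix(hidden_dim, hidden_dim, rng)  # encoder recurrence
        self.W_dec = rand_matrix(hidden_dim, hidden_dim, rng)  # decoder recurrence
        self.W_out = rand_matrix(num_beams, hidden_dim, rng)   # hidden -> beam scores
        self.hidden_dim = hidden_dim

    def predict(self, feature_seq, num_future):
        # Encoder: fold the observed visual features into one hidden state.
        h = [0.0] * self.hidden_dim
        for x in feature_seq:
            a = matvec(self.W_in, x)
            b = matvec(self.W_enc, h)
            h = [math.tanh(ai + bi) for ai, bi in zip(a, b)]
        # Decoder: emit one beam index per step, current step first,
        # then advance the state for each future step.
        beams = []
        for _ in range(num_future + 1):
            scores = matvec(self.W_out, h)
            beams.append(max(range(len(scores)), key=scores.__getitem__))
            h = [math.tanh(v) for v in matvec(self.W_dec, h)]
        return beams


# Hypothetical input: 5 past frames, each summarized by 4 visual features.
model = TinyEncoderDecoder(feat_dim=4, hidden_dim=8, num_beams=64)
seq = [[0.1 * t, 0.5, 0.2, 0.3] for t in range(5)]
beams = model.predict(seq, num_future=3)  # current beam + 3 future beams
```

Because the weights are random, the predicted indices carry no meaning; a trained model would learn the weights from paired camera and beam measurements.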

DeepSense 6G Dataset

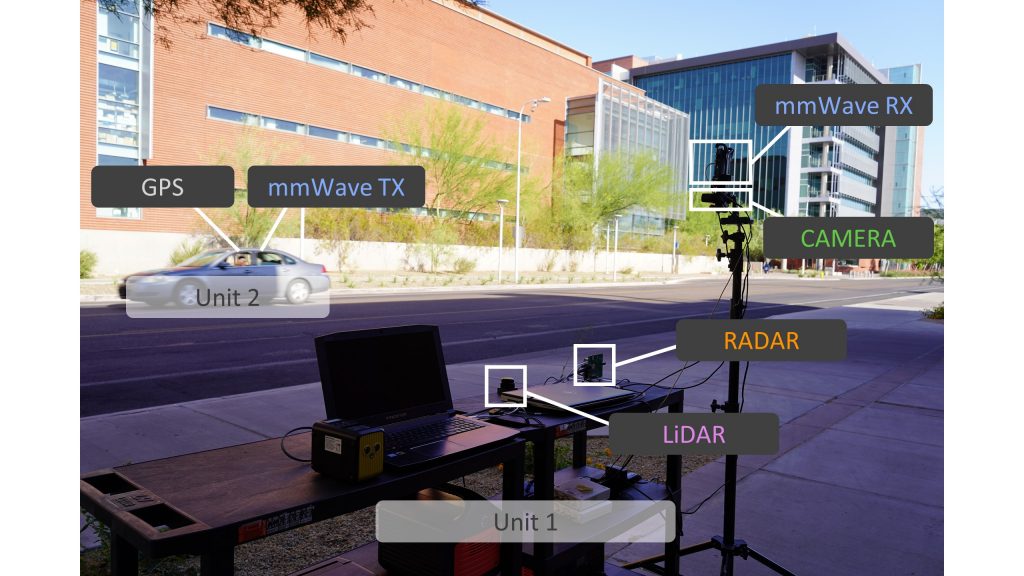

DeepSense 6G is a real-world multi-modal dataset that comprises coexisting sensing and communication data, such as mmWave wireless communication, camera, GPS, LiDAR, and radar data, collected in realistic wireless environments. A link to the DeepSense 6G website is provided below.

Scenario

In this beam tracking task, we build development/challenge datasets based on the DeepSense data from scenario 8. For further details regarding the scenarios, follow the links provided below.

Citation

A. Alkhateeb, G. Charan, T. Osman, A. Hredzak, J. Morais, U. Demirhan, and N. Srinivas, “DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset,” to be available on arXiv, 2022. [Online]. Available: https://www.DeepSense6G.net

@Article{DeepSense,
  author = {Alkhateeb, A. and Charan, G. and Osman, T. and Hredzak, A. and Morais, J. and Demirhan, U. and Srinivas, N.},
  title = {{DeepSense 6G}: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset},
  journal = {to be available on arXiv},
  year = {2022},
  url = {https://www.DeepSense6G.net},
}

@inproceedings{jiang2022computer,
  title = {Computer vision aided beam tracking in a real-world millimeter wave deployment},
  author = {Jiang, Shuaifeng and Alkhateeb, Ahmed},
  booktitle = {2022 IEEE Globecom Workshops (GC Wkshps)},
  pages = {142--147},
  year = {2022},
  organization = {IEEE},
}