User Identification: The Key Enabler for Multi-User Vision-Aided Wireless Communications

Available on arXiv

Gouranga Charan and Ahmed Alkhateeb

Wireless Intelligence Lab

Arizona State University

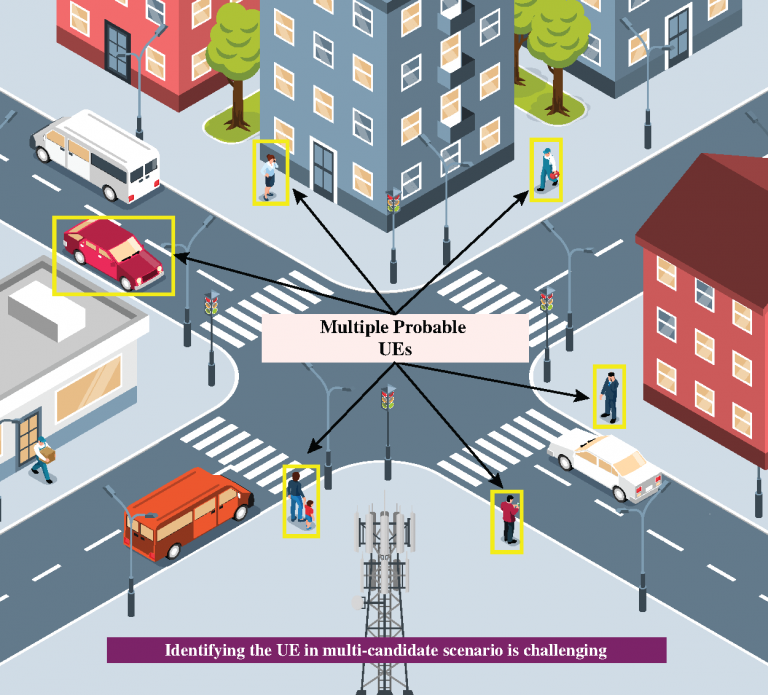

This figure illustrates the challenge of sensing-aided user identification in a multi-candidate scenario. As shown in the figure, any one or more of the highlighted objects could be the user(s).

Abstract

Vision-aided wireless communication is attracting increasing interest and finding new use cases in various wireless communication applications. These vision-aided communication frameworks leverage the visual data captured, for example, by cameras installed at the infrastructure or mobile devices, to construct some perception about the communication environment (geometry, users, scatterers, etc.). This is typically achieved through the use of deep learning and advances in computer vision and visual scene understanding. Prior work has investigated various problems such as vision-aided beam, blockage, and hand-off prediction in millimeter wave (mmWave) systems and vision-aided covariance prediction in massive MIMO systems. This prior work, however, has focused on scenarios with a single object (user) moving in front of the camera. To enable vision-aided wireless communication in practice, it is important for these systems to be able to operate in crowded scenarios with multiple objects in the visual scene. In this paper, we define the user identification task as the key enabler for realistic vision-aided wireless communication systems that can operate in crowded scenarios and support multi-user applications. The objective of the user identification task is to distinguish the target communication user from the other candidate objects (distractors) in the visual scene. We develop machine learning models that process either one frame or a sequence of frames of visual and wireless data to efficiently identify the target user in the visual/communication environment. Using the large-scale multi-modal sensing and communication dataset, DeepSense 6G, which is based on real-world measurements, we show that the developed approaches can successfully identify the target users with more than 97% accuracy in realistic settings. This paves the way for scaling vision-aided wireless communication applications to real-world scenarios and practical deployments.

Proposed Solution

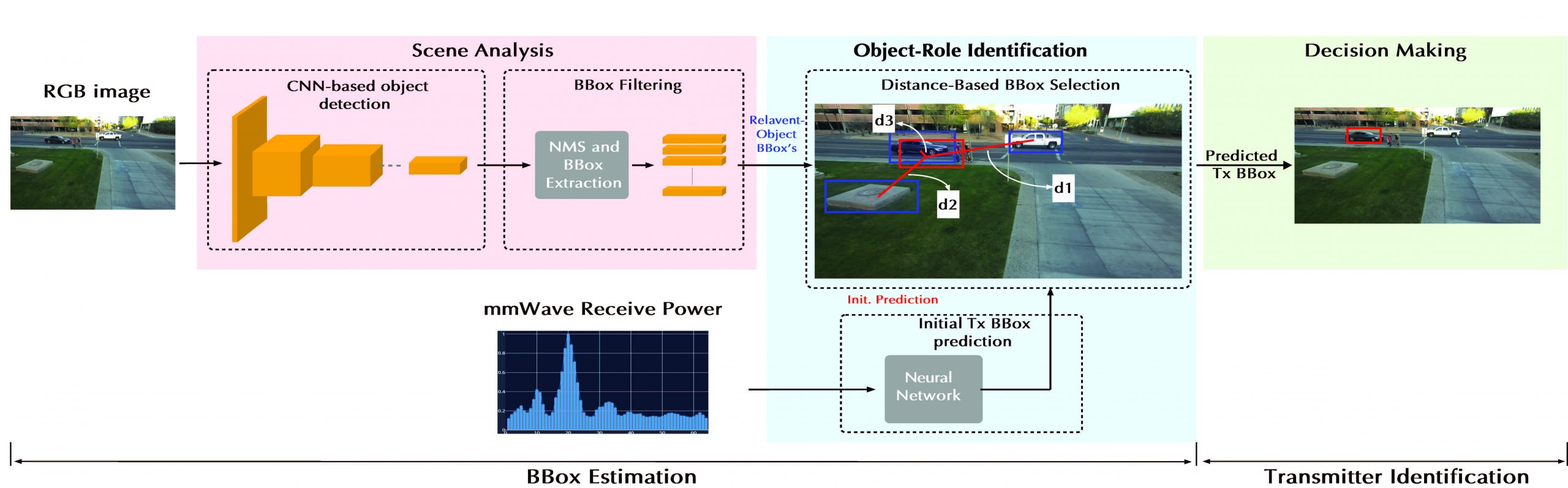

This figure presents the proposed single-sample transmitter identification model, which leverages both visual and wireless data to predict which object in the scene is the transmitter.
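To make the idea of combining visual and wireless data concrete, here is a minimal sketch of a simple geometric baseline. It is not the machine learning model proposed in the paper. It assumes that an object detector has already produced candidate bounding boxes, that a vector of per-beam receive power is available, and that the beams sweep the camera's field of view uniformly from left to right. The names `Candidate`, `beam_to_x`, and `identify_user` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Candidate:
    """One detected object in the image (hypothetical structure).

    x_center is the horizontal bounding-box center, normalized to [0, 1].
    """
    label: str
    x_center: float


def beam_to_x(beam_index: int, num_beams: int) -> float:
    """Map a beam index to a normalized horizontal image position.

    Assumption: beams cover the camera's field of view uniformly,
    with index 0 at the left edge and num_beams - 1 at the right edge.
    """
    return (beam_index + 0.5) / num_beams


def identify_user(candidates: Sequence[Candidate],
                  beam_power: Sequence[float]) -> int:
    """Return the index of the candidate nearest to the strongest beam."""
    if not candidates:
        raise ValueError("at least one candidate is required")
    if not beam_power:
        raise ValueError("beam_power must be non-empty")
    # Beam with the highest receive power.
    best_beam = max(range(len(beam_power)), key=lambda i: beam_power[i])
    target_x = beam_to_x(best_beam, len(beam_power))
    # Candidate whose box center is closest to that beam's direction.
    return min(range(len(candidates)),
               key=lambda i: abs(candidates[i].x_center - target_x))


# Illustrative, made-up values: three candidates and an 8-beam power vector.
scene = [Candidate("car", 0.15), Candidate("pedestrian", 0.55), Candidate("truck", 0.90)]
power = [0.1, 0.2, 0.3, 0.6, 0.9, 0.4, 0.2, 0.1]
chosen = scene[identify_user(scene, power)]
```

A fixed geometric rule like this would likely struggle when several candidates fall under the same beam, which is one reason a learned model over both modalities is a natural choice.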

Video Presentation

DeepSense 6G Dataset

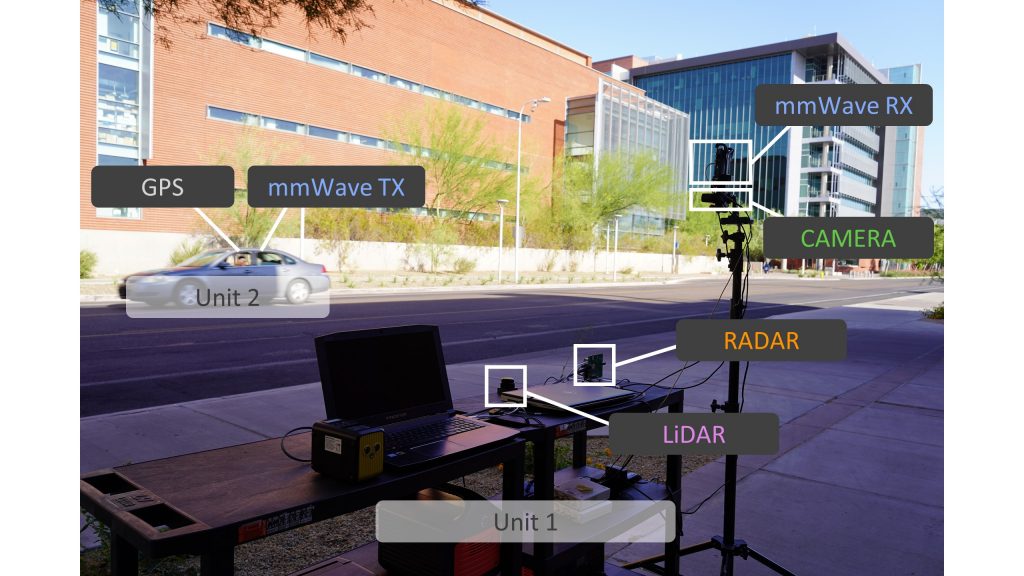

DeepSense 6G is a real-world multi-modal dataset that comprises coexisting sensing and communication data, such as mmWave wireless communication, camera, GPS, LiDAR, and radar data, collected in realistic wireless environments. A link to the DeepSense 6G website is provided below.

Scenarios 1, 3, and 4

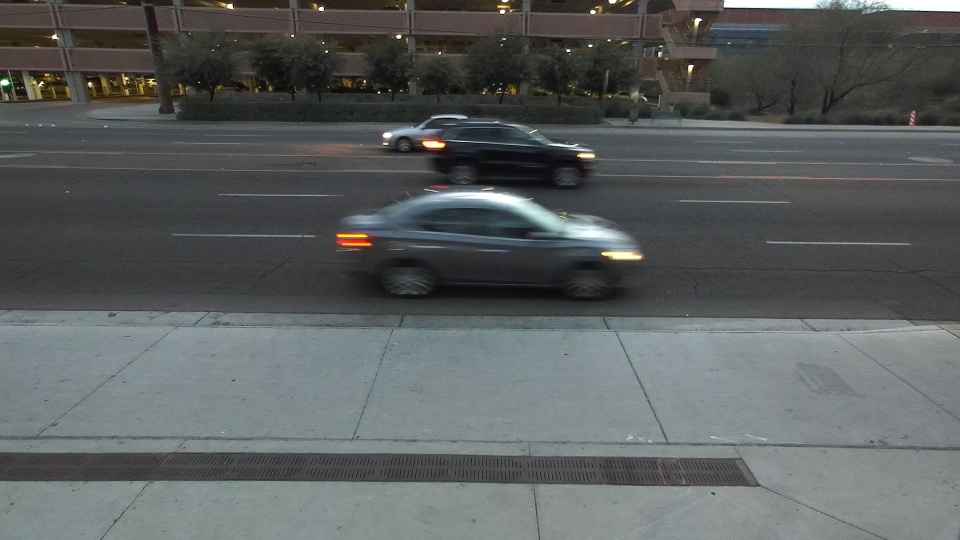

This figure shows image samples from scenarios 1, 3, and 4. The samples span the different lighting conditions (day, dusk, and night) under which the dataset was collected, highlighting the diversity of these scenarios.

Citation

A. Alkhateeb, G. Charan, T. Osman, A. Hredzak, J. Morais, U. Demirhan, and N. Srinivas, “DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset,” IEEE Communications Magazine, 2023.

@Article{DeepSense,
  author={Alkhateeb, Ahmed and Charan, Gouranga and Osman, Tawfik and Hredzak, Andrew and Morais, Joao and Demirhan, Umut and Srinivas, Nikhil},
  title={DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset},
  journal={IEEE Communications Magazine},
  year={2023},
  publisher={IEEE}}

G. Charan and A. Alkhateeb, “User Identification: The Key Enabler for Multi-User Vision-Aided Wireless Communications,” arXiv preprint arXiv:2210.15652, 2022.

@Article{charan2022user,
  title={User identification: The key enabler for multi-user vision-aided wireless communications},
  author={Charan, Gouranga and Alkhateeb, Ahmed},
  journal={arXiv preprint arXiv:2210.15652},
  year={2022}}