DeepMIMO: The Data Foundation for Wireless AI Created by ASU Researchers

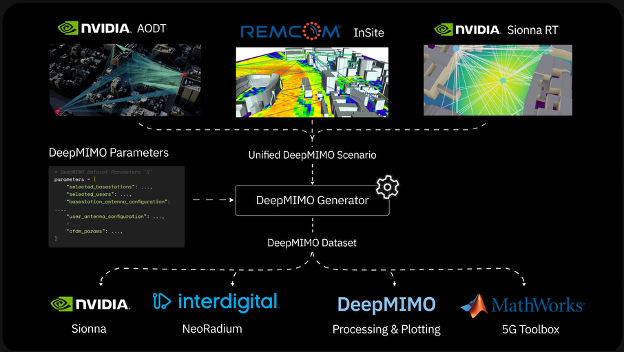

Integrates with ray tracing and 5G simulation tools from NVIDIA, MathWorks and Remcom

1. AI-Native Wireless: From Equations to Intelligence

For most of its history, wireless communication advanced through equations. Engineers modeled how signals travel, how antennas radiate, and how noise interferes. These models powered radios, televisions, and the first generations of mobile phones. They were elegant and effective, but also limited.

Today’s wireless networks face demands far beyond what those models were built for. Billions of devices connect at once—smartphones, cars, sensors, and machines—each moving, reflecting signals, and competing for spectrum. At the same time, new applications are raising the bar: autonomous driving requires centimeter-level positioning, virtual and augmented reality require ultra-low latency, and smart cities demand reliable connections across dense, complex environments.

Traditional formulas struggle to keep up with this scale and diversity. This is where artificial intelligence becomes essential. Instead of relying only on handcrafted equations, AI learns patterns directly from data. With enough examples, AI models can predict how signals will behave in a crowded city, estimate a user’s location, or adapt to changing conditions in real time. This shift defines AI-native wireless: networks that sense, learn, and optimize themselves, unlocking applications that conventional methods cannot support.

2. Wireless AI’s Most Difficult Challenge: Data

Machine learning runs on data the way engines run on fuel. In wireless, that data means detailed measurements of how signals travel from transmitters to receivers in real environments. But collecting those measurements is harder than it sounds.

Real-world experiments require expensive hardware, careful synchronization across antennas, and precise calibration. They demand thousands of measurements taken at fine spatial resolution, which in turn consumes a vast number of person-hours. Even after this effort, the data reflects only one location at one moment in time. Move a car, add a building, or change the weather, and the measurements shift. Reproducing the experiment becomes nearly impossible.

To avoid these costs, researchers often turn to mathematical or stochastic models that generate wireless channels quickly. These models scale, but they gloss over the rich details of reality. They rarely distinguish between an urban canyon and an open campus, and AI trained on them may fail in deployment.

Physics offers two alternatives. The most accurate option solves Maxwell’s equations directly, but such full-wave solvers are practical only at very small scales: they can take centuries of compute time for environments spanning meters. At the other end is geometric optics, which approximates radio waves as rays that reflect, diffract, and scatter. Its most advanced form for wireless is ray tracing, which can simulate signals across realistic, city-scale environments. Ray tracing delivers site-specific realism at scale, but it demands both expertise and heavy computation, which has kept it out of reach for many researchers until now.

This tension—between realism, scalability, and reproducibility—defines the central challenge of AI research in wireless. To unlock the promise of AI-native networks, we need data that is both realistic and accessible.

3. DeepMIMO: A Shared Foundation for Wireless AI

To meet the data needs of wireless researchers, DeepMIMO, a toolchain and a database of ray tracing datasets, emerged with a simple mission: to make ray tracing accessible to everyone.

Let’s clarify the two publicly available components:

Why a Database?

Ray tracing produces the most realistic large-scale wireless data available today, but running these simulations demands specialized software, high-end hardware, and often days of compute; some scenarios can take more than a week. DeepMIMO’s database provides a curated, versioned collection of pre-computed, ray-traced datasets that cover diverse environments and are distributed in a unified schema with rich scene metadata. Researchers can download the datasets and use them immediately, with no supercomputer required, and because the format and repository are shared, results are reproducible across groups. This common base has already enabled a growing body of work (750+ citations and climbing), letting researchers replicate findings and build directly on prior results. The DeepMIMO team reports that the database has more than 150 ray tracing scenarios and should scale to thousands in the coming months.

Why a Toolchain?

Even with data available, the ecosystem was fragmented: different ray tracers emitted incompatible outputs, and connecting them to simulators and ML workflows involved brittle, one-off scripts that hindered reuse and reproducibility. DeepMIMO’s toolchain is a lightweight Python stack that standardizes and connects this pipeline. It consists of:

- Converters that normalize outputs from multiple ray tracing engines into the DeepMIMO format,
- Channel generators that compute wireless channels using parameterized scenes and sampling plans (arrays, carriers, user distributions), and
- Downstream integrations that export/adapt data for common simulators and ML frameworks.

This turns a previously disconnected landscape into an end-to-end, repeatable workflow that runs in notebooks, on laptops, or in the cloud—without special hardware or OS requirements.
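To make the channel-generation step more concrete, here is a minimal sketch of how per-path ray tracing outputs can be combined into a channel vector. It is not the DeepMIMO API: the `Path` structure, its field names, and the `ula_channel` function are hypothetical, and the sketch assumes a narrowband channel, a single-antenna user, and a base station with a half-wavelength uniform linear array that sees azimuth-only departure angles.

```python
import cmath
import math
from dataclasses import dataclass


@dataclass
class Path:
    """One ray-traced propagation path (hypothetical field names)."""
    power_db: float   # path power relative to transmit power, in dB
    phase_deg: float  # path phase at the carrier frequency, in degrees
    aod_deg: float    # azimuth angle of departure from array broadside


def steering_vector(num_ant, angle_deg, spacing_wl=0.5):
    """Array response of a uniform linear array (spacing in wavelengths)."""
    sin_a = math.sin(math.radians(angle_deg))
    return [cmath.exp(2j * math.pi * spacing_wl * n * sin_a)
            for n in range(num_ant)]


def ula_channel(paths, num_ant):
    """Narrowband channel: sum of complex path gains times steering vectors."""
    h = [0j] * num_ant
    for p in paths:
        amplitude = 10 ** (p.power_db / 20)  # dB power -> linear amplitude
        gain = amplitude * cmath.exp(1j * math.radians(p.phase_deg))
        a = steering_vector(num_ant, p.aod_deg)
        for n in range(num_ant):
            h[n] += gain * a[n]
    return h


# Toy example: a strong broadside path plus a weaker reflected path.
paths = [Path(-80.0, 30.0, 0.0), Path(-95.0, -120.0, 40.0)]
h = ula_channel(paths, num_ant=8)
```

Changing the array size or spacing only changes the steering vectors, not the ray-traced paths. This is why one pre-computed scenario can be reused across many antenna configurations.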

Free, open-source, and community-driven, DeepMIMO has become more than just a dataset. It is a shared foundation that lowers the barrier to wireless AI research while connecting researchers to each other and to the broader ecosystem of simulation tools.

4. How DeepMIMO Connects to Tools and Applications

DeepMIMO is more than a dataset. It is used within the AI-RAN Alliance and is rapidly becoming the standard for ray tracing datasets. Its development reflects a close collaboration with two of the largest players in wireless simulation: NVIDIA and Remcom. By bringing together cutting-edge ray tracing engines with an open and reproducible framework, DeepMIMO has set the benchmark for how wireless AI research can be shared and scaled.

As Professor Ahmed Alkhateeb, who leads the Wireless Intelligence Lab, often emphasizes:

“DeepMIMO enables researchers to spend less time generating data and more time building models, which accelerates the pace of innovation.”

This shift makes wireless AI research more inclusive. Anyone—from a graduate student with a laptop to a company with access to GPUs—can begin from the same foundation and scale up as needed.

Some of the applications DeepMIMO supports include:

- Beam selection and channel prediction: AI models can learn to choose the best signal paths in complex environments, improving speed and reliability.
- Localization: Datasets link signals with precise user positions, enabling centimeter-level positioning for autonomous driving or robotics.
- Blockage prediction: AI can forecast when a signal will be blocked by obstacles such as buildings or vehicles, allowing networks to adapt proactively.
- Sensing-assisted communications: Through projects such as DeepVerse, datasets now combine radio signals with sensing modalities (e.g., cameras, LiDAR, radar) to support integrated sensing and communications (ISAC).
- Dataset benchmarking: Researchers can evaluate algorithms consistently across the same scenarios, creating a fair basis for comparison and community progress.
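As an illustration of the beam selection task listed above, the following sketch labels a channel with its best beam by exhaustive search over a DFT codebook. A learned model would then try to predict that label from cheaper inputs such as user position. The function names are hypothetical and not part of DeepMIMO, and the toy channel assumes a single unit-gain path arriving at a half-wavelength uniform linear array.

```python
import cmath
import math


def dft_codebook(num_ant):
    """Return num_ant unit-norm DFT beams for a half-wavelength ULA."""
    scale = 1 / math.sqrt(num_ant)
    return [
        [scale * cmath.exp(2j * math.pi * k * n / num_ant)
         for n in range(num_ant)]
        for k in range(num_ant)
    ]


def beam_gain(beam, channel):
    """Beamforming gain |w^H h|^2 for beam w and channel h."""
    inner = sum(w.conjugate() * h for w, h in zip(beam, channel))
    return abs(inner) ** 2


def best_beam(channel, codebook):
    """Exhaustive search: index and gain of the strongest beam."""
    gains = [beam_gain(b, channel) for b in codebook]
    idx = max(range(len(gains)), key=gains.__getitem__)
    return idx, gains[idx]


num_ant = 8
# Toy channel: one unit-gain path at 30 degrees from broadside.
angle = math.radians(30)
channel = [cmath.exp(1j * math.pi * n * math.sin(angle))
           for n in range(num_ant)]

codebook = dft_codebook(num_ant)
index, gain = best_beam(channel, codebook)
print(index, round(gain, 6))  # beam 2 aligns with the path; gain equals num_ant
```

Exhaustive search costs one measurement per beam. Beam prediction aims to avoid that overhead in large arrays.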

By connecting seamlessly to modern simulation platforms such as NVIDIA Aerial Omniverse Digital Twin (AODT) and Sionna, DeepMIMO acts as both an entry point for wireless AI research and a bridge to advanced digital twin development. As João Morais, the PhD student tech lead behind the latest DeepMIMO release, puts it:

“Our goal has always been to make ray tracing data accessible to everyone, and in doing so, to accelerate and unify AI research in wireless.”

5. What’s Next for Wireless AI

Wireless networks are at a turning point. Traditional models cannot handle the scale and complexity of emerging applications, and AI is becoming the only way forward. The next steps in this evolution are already visible:

- Network Digital Twins: Physics-based, site-specific virtual replicas of entire networks, used for testing, optimization, and automation before deployment.
- Integrated Sensing and Communications (ISAC): Networks that not only transmit data but also sense their surroundings, fusing communications with radar and vision to improve awareness.
- Multi-modal data fusion: Combining radio signals with camera or LiDAR inputs to build richer models of the environment, as demonstrated in DeepVerse.
- Edge GPU acceleration: As workloads grow heavier, GPUs at the edge will make real-time inference and adaptation feasible.